The Curse of Self-Service

These days, we seem to be high on data and data-related trends. My opinion on Big Data should be well known to my readers: it is something that has to be carefully managed, and it is largely a fad for all but a select few companies.

With data being the new black, similar trends grab the attention of modern managers. One of these is Self Service. It seems like such a logical consequence of our advanced data visualisation: democratise the data.

It’s worth noting that the notion of humans making better decisions when well served with information is rather old. Thomas Jefferson said: “whenever the people are well-informed, they can be trusted with their own government”. But what exactly does it mean to be well-informed? Another great statesman, Churchill, said: “The best argument against democracy is a five-minute conversation with the average voter”.

In this blog entry, I will argue that it does not follow that humans will make better decisions if we just give them access to more data. In fact, allowing people to self-service their data can be outright harmful.

Myth Busting Query Optimisation

It’s been some time since I posted here, but recent customer events prompted me to write up some notes I have been taking over the past years.

The problem I would like to talk to you about is one that is well examined and well understood, yet continues to create frustrations with customers. Unfortunately, some of these frustrations come from “best practices” that people apply consistently, which in turn consistently gets them into trouble.

When you are done reading this post, I hope you will have a better understanding of how to avoid these pitfalls. It is also my hope that some of your expectations about query optimisation will have been adjusted.

Bottleneck Diagnosis on SQL Server – New Scripts

Finally, I have found some time with my good colleagues at Fusion-io to work on some of my old SQL Scripts.

Our first script queries the servers for wait stats – a very common task for nearly all SQL Server DBAs. This is the first thing I do when I meet a new SQL Server installation and I have generally found that I apply the same filters over and over again. Because of this, I worked with Sumeet Bansal to standardise our approach to this.
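The idea of applying the same filters to wait stats every time can be sketched in a few lines. This is a minimal illustration, not the script Sumeet and I standardised: the function name `top_waits`, the list of ignored wait types and the sample numbers are all assumptions made up for the example. The input is assumed to be (wait_type, wait_time_ms) pairs as you would read them from sys.dm_os_wait_stats.

```python
# Wait types that usually reflect idle background activity. This list is an
# assumption for illustration, not the filter used in the actual script.
BENIGN_WAITS = {
    "LAZYWRITER_SLEEP",
    "SQLTRACE_BUFFER_FLUSH",
    "REQUEST_FOR_DEADLOCK_SEARCH",
    "XE_TIMER_EVENT",
    "CHECKPOINT_QUEUE",
    "LOGMGR_QUEUE",
    "SLEEP_TASK",
    "WAITFOR",
}


def top_waits(rows, limit=10):
    """Rank (wait_type, wait_time_ms) rows after dropping benign waits.

    Returns a list of (wait_type, wait_time_ms, percent_of_total) tuples,
    where the percentage is relative to the filtered total.
    """
    kept = [(w, ms) for w, ms in rows if w not in BENIGN_WAITS and ms > 0]
    total = sum(ms for _, ms in kept)
    ranked = sorted(kept, key=lambda r: r[1], reverse=True)[:limit]
    return [(w, ms, round(100.0 * ms / total, 1)) for w, ms in ranked]


# Made-up sample numbers, shaped like rows from sys.dm_os_wait_stats.
sample = [
    ("PAGEIOLATCH_SH", 600),
    ("WRITELOG", 300),
    ("LAZYWRITER_SLEEP", 90000),
    ("CXPACKET", 100),
]
for wait_type, ms, pct in top_waits(sample):
    print(f"{wait_type:<20} {ms:>8} ms {pct:>5}%")
```

Without the filter, the idle LAZYWRITER_SLEEP wait would dominate the list and hide the waits that actually matter.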

You can watch Sumeet introduce the script in this YouTube video: http://youtu.be/zb4FsXYvibY. A TV star is born!

We have already used this script at several customers in a before/after Fusion-io situation. As you may know, the tuning game changes a lot when you remove the I/O bottleneck from the server.

Based on our experiences so far, I wanted to share some more exotic waits and latches we have been seeing lately.

Quantifying the Cost of Compression

Last week, at SQL Saturday Exeter, I did a new Grade of Steel experiment: to quantify just how expensive it is to do PAGE compression.

The presentation is a 45 minute show, but for those of you who were not there, here is a summary of the highlights of the first part.

My tests were all run on the TPC-H LINEITEM table at scale factor 10. That is about 6.5GB of data.

Test: Table Scan of Compressed Data

My initial test is this statement:

SELECT MAX(L_SHIPDATE)

, MAX(L_DISCOUNT)

, MAX(L_EXTENDEDPRICE)

, MAX(L_SUPPKEY)

, MAX(L_QUANTITY)

, MAX(L_RETURNFLAG)

, MAX(L_PARTKEY)

, MAX(L_LINESTATUS)

, MAX(L_TAX)

, MAX(L_COMMITDATE)

, MAX(L_RECEIPTDATE)

FROM LINEITEM

Because the statement only returns one row, the result measured does not drown in client transfer time. Instead, the raw cost of extracting the columns from the compressed format can be quantified.

The result was quite shocking on my home 12 core box:

Even when doing I/O, it still takes quite a bit longer to scan the compressed format. And when scanning from DRAM, the cost is a whopping 2x.

A quick xperf run shows where the time goes when scanning from memory:

Indeed, the additional CPU cost explains the effect. The code path is simply longer with compression.

Test: Singleton Row fetch

By sampling some rows from LINEITEM, it is possible to measure the cost of fetching pages in an index seek. This is the test:

SELECT MAX(L_SHIPDATE)

, MAX(L_DISCOUNT)

, MAX(L_EXTENDEDPRICE)

, MAX(L_SUPPKEY)

, MAX(L_QUANTITY)

, MAX(L_RETURNFLAG)

, MAX(L_PARTKEY)

, MAX(L_LINESTATUS)

, MAX(L_TAX)

, MAX(L_COMMITDATE)

, MAX(L_RECEIPTDATE)

FROM LI_SAMPLES S

INNER LOOP JOIN LINEITEM L ON S.L_ORDERKEY = L.L_ORDERKEY

OPTION (MAXDOP 1)

This gives us the plan:

Which has the desired characteristics of having the runtime dominated by the seek into the LINEITEM table.

The numbers again speak for themselves:

And again, the xperf trace shows that this runtime difference can be fully explained from longer code paths.

Test: Singleton UPDATE

Using the now familiar pattern, we can run a test that updates the rows instead of selecting them. By updating a column that is NOT NULL and an INT, we can make sure the update happens in place. This means we pay the price to decompress, change and recompress that row – which should be more expensive than reading. And indeed it is:

Summary

Quoting a few of my tests from my presentation, I have shown you that PAGE compression carries a very heavy CPU cost for each page access. Of course, not every workload is dominated by accessing data in pages – some are more compute heavy on the returned data. However, in these days when I/O bottlenecks can easily be removed, it is worth considering if the extra CPU cycles to save space are worth it.

It turns out that it is also possible to show another expected result: that locks are held longer when updating compressed pages (thereby limiting scalability if the workload contains heavily contended pages). But that is the subject of a new blog entry.

More Courses Available – in Sweden and UK!

I am happy to announce that my tuning course and my newly developed Data Warehousing course are now available.

Tuning/Scaling Course

The tuning course will be hosted by CrossJoin Consulting in London, UK. You can sign up for it here:

- Tuning Course in London (13th June 2013)

You can still make it for the early bird rates. This course is probably THE deepest level course about SQL Server out there. You will learn the nitty-gritty details of xperf profiling, spinlock detection and multi-threaded optimisation.

We are going to be digging deep below the surface of SQL Server here, so be prepared for an intensive day.

Data Warehousing Course

The next data warehousing course will be in Kista, Sweden. It will be hosted by SolidQ and you can sign up for it here:

- Data Warehousing in Sweden (7th February 2013)

This will be a unique course with both Davide Mauri and myself teaching. It’s a one-day intensive training in my usual “no nonsense” style where you learn about data modeling from two of the leading experts in the field. The course extends the ideas I have blogged extensively about here.

There are still seats left, but they are going fast.

I look forward to seeing you there!

VM-ware Shared folders are really Slow

I am currently waiting for some code to compile and found a bit of time to type up a quick blog.

As described in a previous post, I have set up my laptop to host Windows in a virtual machine guest with Mac OS X (Mountain Lion) as the host.

Today, I needed to compile some code and thinking I was being clever, I put the code on the host OS (OSX) and shared the folder via VM-ware to the Windows guest OS.

Compiling from inside VM-ware

I ran my msbuild process from the shared folder, and it took FOREVER to compile. The obvious choice here is of course to blame virtualisation itself – after all, I only have 2 cores allocated to the virtual machine.

But not so fast! Our tuning knowledge comes in quite handy here. Have a look at the CPU pattern while I am compiling:

Just like with SQL Server, I start my tuning at a very high level (in this case, task manager) and dig in from there.

The first question we ask ourselves as tuners is: Does what I see make sense?

In this case, it obviously doesn’t. MSBUILD is set up to build in a highly parallelised fashion, so it should be using my cores, and there is no obvious I/O bottleneck in the system. Having 50% of two cores busy (and with high kernel times) looks a lot like a single threaded bottleneck to me. The build was taking over 15 minutes, which was much longer than expected.

Diagnosing the Problem – our friend Xperf

Normally, I use xperf to troubleshoot servers. But it sure comes in handy for misbehaving client machines too.

Task manager only shows that the time is spent in the process d.exe – which is part of the build process. Is the compiler bad or must we look elsewhere? Sure would be surprising if the compiler used all the kernel time, wouldn’t it?

Here is the quick and dirty CPU “zoom in” xperf command to get the details we need:

- xperf -on latency -stackwalk profile

- …wait a bit

- xperf -d <myfile>.etl

This captures a sample of the stack and CPU usage of each process and kernel module. From here, it is quite clear what is going on – let me walk you through the analysis.

First, open the trace with xperfview. I recommend staying with the Win7 version of xperfview, as the Win8 interface is… well… a Win8 interface.

Pick the CPU Sampling per CPU, right click and choose Summary Table:

From here, pick the columns: Module, CPU and % Weight which allows you to summarise by module. On my box, it looks like this:

Aha!… Most of the CPU burn goes into vmci.sys (just ignore intelppm.sys). This isn’t a part of Windows. It is relatively easy to trace this file back to VM-ware.

So, who calls into this kernel module? Adding the stack column after the module, we can see that too:

Eureka: It is file system access that is causing the slowdown. See the call stack? Starts from GetFileAttributesW and ends up inside vmci.sys.

Fixing the problem

Now, before you go ahead and conclude that VMware adds a horrible overhead to I/O, let’s just try to move the source files into the guest OS itself. Recall that my machine is using VM-ware shared folders to access the source code. It might simply be the sharing framework that is acting strange…

The results of using the guest OS’s file system are staggering. Running the build process now looks like this at the CPU level:

And the total build time is down from over 15 minutes to less than 3 minutes.
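If you want to check for this kind of overhead without running a full build, a small metadata benchmark can help. The sketch below is my own illustration, with a hypothetical function name `time_metadata_lookups`. It uses Python's `os.stat` as a stand-in for the attribute lookups seen in the call stack, on the assumption that both end up in the same file system path; it is not the exact call the build makes.

```python
import os
import time


def time_metadata_lookups(root):
    """Walk `root` and time one os.stat() call per file.

    Returns (file_count, elapsed_seconds). Only metadata is touched; no file
    contents are read, so the timing reflects attribute-lookup overhead.
    """
    paths = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            paths.append(os.path.join(dirpath, name))

    start = time.perf_counter()
    for path in paths:
        try:
            os.stat(path)
        except OSError:
            pass  # file vanished or is inaccessible; skip it
    elapsed = time.perf_counter() - start
    return len(paths), elapsed
```

Run it once against the source tree on the shared folder and once against a copy on the guest's own disk, then compare the elapsed time per file. A large gap points at the sharing layer rather than at the compiler or at virtualisation in general.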

Thank you xperf…

At this point, we are reduced to guessing what is going on

Configuring Kernel Debugging with WinDbg and a NULL modem

Lately, I have been digging deep into Windows to get really low level with the I/O path SQL Server takes (yep, there is an even deeper layer to understand fully).

Once you start playing around with the Windows Kernel, you will at some point need kernel level debugging set up. Traditionally, this is something I have used a Windows machine for, and even there, it can be painful to get working. As you may know, I have switched to Mac as my client machine and my Windows utilities (including WinDbg) now run in VMWare Fusion – it looked like I was heading into an interop nightmare…

Windows 8 allows kernel debugging directly over the network card, but how does one configure kernel debugging with a Windows 2008R2 target from a Mac with VM Ware?

I found a very cheap solution today. You need:

- A USB to Serial (RS-232) converter

- A NULL modem (I would recommend getting a long one, so you don’t have to sit next to the server)

- A target server that you want to debug

- A serial port on the target server

- WinDbg from the Windows SDK in a virtual machine on the Mac

- Symbols set up as per my previous post

A USB converter and a NULL modem is a dirt cheap way to get the required hardware for kernel debugging. I sourced my cables from Maplins (Thanks @SQLServerMonkey) in the UK – for a total of around 20 GBP.

Step 1: Prepare the Client/Debugger

A MacBook Air, like most other lightweight laptops, does not have a serial port, so we have to use a USB/Serial converter. It is possible to debug directly over USB, but good luck with that from a Mac; I didn’t have the courage.

Make sure VMWare routes the USB device to the Windows Guest OS and not the Mac. It will look something like this in VMWare Fusion 5:

Now, install WinDbg and set up your symbol paths if they are not set up already.

Check your Device Manager in the client to see which COM port the USB device created. As you can see below, my laptop mounted the USB/Serial converter as COM3.

After installing the device, I had to restart my virtual machine before VMWare would let me mount it – but what can you expect from a 10 GBP component? Your mileage may vary depending on the serial/USB driver you have.

Step 2: Connect Client and Server

Using the NULL modem, connect the USB/Serial converter to the server’s Serial port.

Make sure the server has the serial port enabled in the BIOS (my Dell box had it disabled, so I had to re-enable it).

Step 3: Configure Server/Debugee for kernel debugging

Log into the server and start a command prompt as administrator.

First, copy the default startup options into a new boot option:

- BCDEDIT /copy {current} /d DebugMode

This will create a new entry in the boot list when the server starts. Make a note of the GUID returned or copy it to the clipboard (using the ever so annoying copy/paste function in the Windows command prompt)

Next, configure the parameters for serial cable debugging:

- BCDEDIT /set <GUID> debugport <port #>

- BCDEDIT /set <GUID> debugtype serial

- BCDEDIT /set <GUID> baudrate 115200

Replace <GUID> with the GUID returned previously. Set <port #> to the COM port the NULL modem is connected to in your server (NOT the COM port of the client). For example, if the server has the NULL modem in COM2, set <port #> to 2.

Finally, enable debugging on the newly created boot option:

- BCDEDIT /debug <GUID> ON

Validate that your configuration works by running:

- BCDEDIT /v

You should get an output somewhat like this (this is also how you find the GUID if you didn’t note it down before):

If you want to make sure debugging is always turned on (great for sandbox machines where you explore stuff in the kernel) you can use BCDEDIT /default <GUID> to make debugging the default startup option.
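If you configure more than one server, it can be handy to generate the command lines from this step rather than retype them. The sketch below only builds the strings; it does not run BCDEDIT. The function name `bcdedit_serial_debug_commands` and the placeholder GUID are made up for illustration, and I am assuming the GUID is pasted with its curly braces, the way BCDEDIT prints it.

```python
def bcdedit_serial_debug_commands(guid, com_port, baudrate=115200):
    """Return the BCDEDIT command lines for serial kernel debugging.

    `guid` is the identifier printed by BCDEDIT /copy, braces included
    (an assumption about how you pasted it). `com_port` is the COM port
    number on the target server, not on the debugging client.
    """
    if not (guid.startswith("{") and guid.endswith("}")):
        raise ValueError("expected a GUID wrapped in braces")
    if com_port < 1:
        raise ValueError("COM port numbers start at 1")
    return [
        f"BCDEDIT /set {guid} debugport {com_port}",
        f"BCDEDIT /set {guid} debugtype serial",
        f"BCDEDIT /set {guid} baudrate {baudrate}",
        f"BCDEDIT /debug {guid} ON",
    ]


# Placeholder GUID, not a real boot entry.
example_guid = "{01234567-89ab-cdef-0123-456789abcdef}"
for line in bcdedit_serial_debug_commands(example_guid, com_port=2):
    print(line)
```

Paste the printed lines into the administrator command prompt on the server, in order.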

Step 4: Start Debugging

You are now ready to start debugging the Windows kernel on the server. Here is how:

On the client, start WinDbg and choose File -> Kernel Debug (or CTRL+K) and set up the COM port you got in step 1:

Press OK, and reboot the server. If you didn’t select the debug configuration as the default boot option, make sure you pick it when the server starts.

If you have done things right, you will get something like this in WinDbg (below, I broke execution with CTRL+C)

One thing to note when you are debugging the kernel: Not all your typical WinDbg commands work as they normally do (for some good reasons), but that is outside of scope for this blog entry.

Time to dig in even deeper… my Macbook Air to a Windows box :-)…Happy hacking everyone.

One Million IOPS on a 2 socket server

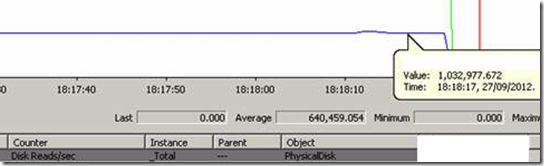

Today, using Fusion ioMemory technology, I worked with our team of experts to hit 1M 4K random read IOPS on Windows. We did this on a 2-socket Sandy Bridge Server.

Below is the screenshot to prove it:

Think for a moment about what it will take to actually make use of all those IOPS. If this is the type of speed you have at your disposal, maybe it is time to rethink what is possible. Check out our SDK at http://developer.fusionio.com for the leading edge work Fusion-io is doing in this space.
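To put the number in perspective, here is the back-of-the-envelope arithmetic. It is a sketch that assumes "4K" means 4096 bytes; nothing here is measured, it is just the headline figure converted into bandwidth and a time budget.

```python
IOPS = 1_000_000          # from the post: 1M random reads per second
BLOCK_BYTES = 4 * 1024    # "4K" taken as 4096 bytes (assumption)

bytes_per_second = IOPS * BLOCK_BYTES
gb_per_second = bytes_per_second / 1e9        # decimal gigabytes
gib_per_second = bytes_per_second / 2**30     # binary gibibytes
ns_per_io = 1e9 / IOPS                        # aggregate time budget per I/O

print(f"{gb_per_second:.3f} GB/s ({gib_per_second:.2f} GiB/s)")
print(f"{ns_per_io:.0f} ns of wall-clock time per I/O across the whole server")
```

That is roughly 4 GB of random reads every second, with one I/O completing every microsecond on average. Whatever consumes the data has to keep up with that rate.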

As I am sure my regular readers can guess, I am loving my new job!

First publicly available courses – in Aarhus, Denmark – 23-24 October

I am pleased to announce that both my Tuning Course and the Data Warehouse Modeling course will be run in Aarhus, Denmark. The courses will be held in English, even though they are hosted in the very city where I was born.

The courses will be hosted by Orangeman, who will be handling the logistics. There are still available spots and if you act fast, you can get the early bird price.

The relevant details:

- 23rd October 2012: Tuning Course

- 24th October 2012: Data Warehouse modeling

See you there for some intense days of tuning and modeling.