Archive

Synchronisation in .NET – Part 4: Partitioned Data Structures

In this final instalment of the synchronisation series, we will look at fully scalable solutions to the problem first stated in Part 1 – adding monitoring that is scalable and minimally intrusive.

Thus far, we have seen how there is an upper limit on how fast you can access cache lines shared between multiple cores. We have tried different synchronisation primitives to get the best possible scale.

Throughout this series, Henk van der Valk has generously lent me his 4-socket machine and been my trusted lab manager and reviewer. Without his help, this blog series would not have been possible.

And now, as is tradition, we are going to show you how to make this thing scale.

Synchronisation in .NET – Part 3: Spin Locks and Interlocks/Atomics

In the previous instalments (Part 1 and Part 2) of this series, we have drawn some conclusions about both .NET itself and CPU architectures. Here is what we know so far:

- When there is contention on a single cache line, the lock() statement scales very poorly and you get negative scale the moment you leave a single CPU core.

- The scale takes a further dip once you leave a single CPU socket.

- Even when we remove the lock() and do thread-unsafe operations, scalability is still poor.

- Going from a class to a padded struct gives a scale boost, though not enough to get linear scale

- The maximum theoretical scale we can get with the current technique is around 90K operations/ms.

In this blog entry, I will explore other synchronisation primitives to make the implementation safe again, namely the spinlock and Interlocks. As a reminder, we are still running the test on a 4-socket machine with 8 cores per socket and hyper-threading enabled (for a total of 16 logical cores per socket).

Synchronisation in .NET – Part 2: Unsafe Data Structures and Padding

In the previous blog post we saw how the lock() statement in .NET scales very poorly when there is contention on a data structure. It was clear that a performance logging framework that relies on an array with a lock on each member to store data will not scale.

Today, we will try to quantify just how much performance we should expect to get from the data structure if we somehow solve locking. We will also see how the underlying hardware primitives bubble up through the .NET framework and break the pretty object oriented abstraction you might be used to.

Because we have already proven that ConcurrentDictionary adds too much overhead, we will focus on arrays as the backing store for the data structure in all future implementations.

Synchronisation in .NET – Part 1: lock(), Dictionaries and Arrays

As part of our tuning efforts at Livedrive, I ran into a deceptively simple problem that beautifully illustrates some of the scale principles I have been teaching to the SQL Server community for years.

In this series of blog entries, I will use what appears to be a simple .NET class to explore how modern CPU architectures handle high speed synchronisation. In the first part of the series, I set the stage and explore the .NET lock() method of coordinating data.

Bottleneck Diagnosis on SQL Server – New Scripts

Finally, I have found some time with my good colleagues at Fusion-io to work on some of my old SQL Scripts.

Our first script queries the servers for wait stats – a very common task for nearly all SQL Server DBAs. This is the first thing I do when I meet a new SQL Server installation and I have generally found that I apply the same filters over and over again. Because of this, I worked with Sumeet Bansal to standardise our approach to this.

You can watch Sumeet introduce the script in this YouTube video: http://youtu.be/zb4FsXYvibY. A TV star is born!

We have already used this script at several customers in a before/after Fusion-io situation. As you may know, the tuning game changes a lot when you remove the I/O bottleneck from the server.

Based on our experiences so far, I wanted to share some more exotic waits and latches we have been seeing lately.

Quantifying the Cost of Compression

Last week, at SQL Saturday Exeter, I did a new Grade of Steel experiment: to quantify just how expensive it is to do PAGE compression.

The presentation is a 45 minute show, but for those of you who were not there, here is a summary of the highlights of the first part.

My tests were all run on the TPC-H LINEITEM table at scale factor 10. That is about 6.5GB of data.

Test: Table Scan of Compressed Data

My initial test is this statement:

SELECT MAX(L_SHIPDATE)
, MAX(L_DISCOUNT)
, MAX(L_EXTENDEDPRICE)
, MAX(L_SUPPKEY)
, MAX(L_QUANTITY)
, MAX(L_RETURNFLAG)
, MAX(L_PARTKEY)
, MAX(L_LINESTATUS)
, MAX(L_TAX)
, MAX(L_COMMITDATE)
, MAX(L_RECEIPTDATE)
FROM LINEITEM

Because the statement only returns one row, the result measured does not drown in client transfer time. Instead, the raw cost of extracting the columns from the compressed format can be quantified.

The result was quite shocking on my home 12 core box:

Even when doing I/O, it still takes quite a bit longer to scan the compressed format. And when scanning from DRAM, the cost is a whopping 2x.

A quick xperf run shows where the time goes when scanning from memory:

Indeed, the additional CPU cost explains the effect. The code path is simply longer with compression.

Test: Singleton Row fetch

By sampling some rows from LINEITEM, it is possible to measure the cost of fetching pages in an index seek. This is the test:

SELECT MAX(L_SHIPDATE)
, MAX(L_DISCOUNT)
, MAX(L_EXTENDEDPRICE)
, MAX(L_SUPPKEY)
, MAX(L_QUANTITY)
, MAX(L_RETURNFLAG)
, MAX(L_PARTKEY)
, MAX(L_LINESTATUS)
, MAX(L_TAX)
, MAX(L_COMMITDATE)
, MAX(L_RECEIPTDATE)
FROM LI_SAMPLES S
INNER LOOP JOIN LINEITEM L ON S.L_ORDERKEY = L.L_ORDERKEY
OPTION (MAXDOP 1)

This gives us the plan:

This plan has the desired characteristic: its runtime is dominated by the seek into the LINEITEM table.

The numbers again speak for themselves:

And again, the xperf trace shows that this runtime difference can be fully explained by longer code paths.

Test: Singleton UPDATE

Using the now familiar pattern, we can run a test that updates the rows instead of selecting them. By updating a column that is NOT NULL and an INT, we can make sure the update happens in place. This means we pay the price to decompress, change and recompress that row – which should be more expensive than reading. And indeed it is:

Summary

Quoting a few of the tests from my presentation, I have shown you that PAGE compression carries a very heavy CPU cost for each page access. Of course, not every workload is dominated by accessing data in pages – some are more compute heavy on the returned data. However, in these days when I/O bottlenecks can easily be removed, it is worth asking whether the extra CPU cycles spent to save space pay off.

It turns out that it is also possible to show another expected result: locks are held longer when updating compressed pages (thereby limiting scalability if the workload contains heavily contended pages). But that is the subject of a new blog entry.

VMware Shared folders are really Slow

I am currently waiting for some code to compile and found a bit of time to type up a quick blog.

As described in a previous post, I have set up my laptop to run Windows as a virtual machine guest with Mac OS X (Mountain Lion) as the host.

Today, I needed to compile some code and, thinking I was being clever, I put the code on the host OS (OS X) and shared the folder via VMware to the Windows guest OS.

Compiling from inside VMware

I ran my msbuild process from the shared folder, and it took FOREVER to compile. The obvious choice here is of course to blame virtualisation itself – after all, I only have 2 cores allocated to the virtual machine.

But not so fast! Our tuning knowledge comes in quite handy here. Have a look at the CPU pattern while I am compiling:

Just like with SQL Server, I start my tuning at a very high level (in this case, task manager) and dig in from there.

The first question we ask ourselves as tuners is: Does what I see make sense?

In this case, it obviously doesn’t. MSBuild is set up to run a highly parallelised build, so it should be using both my cores, and there is no obvious I/O bottleneck in the system. Having 50% of two cores busy (and with high kernel times) looks a lot like a single threaded bottleneck to me. The build was taking over 15 minutes, which was much longer than expected.

Diagnosing the Problem – our friend Xperf

Normally, I use xperf to troubleshoot servers. But it sure comes in handy for misbehaving client machines too.

Task manager only shows that the time is spent in the process d.exe – which is part of the build process. Is the compiler bad or must we look elsewhere? Sure would be surprising if the compiler used all the kernel time, wouldn’t it?

Here is the quick and dirty CPU “zoom in” xperf command to get the details we need:

- xperf -on latency -stackwalk profile

- …wait a bit

- xperf -d <myfile>.etl

This captures a sample of the stack and CPU usage of each process and kernel module. From here, it is quite clear what is going on – let me walk you through the analysis.

First, open the trace with xperfview. I recommend staying with the Win7 version of xperfview, as the Win8 interface is… well… a Win8 interface.

Pick the CPU Sampling per CPU, right click and choose Summary Table:

From here, pick the columns: Module, CPU and % Weight which allows you to summarise by module. On my box, it looks like this:

Aha! Most of the CPU burn goes into vmci.sys (just ignore intelppm.sys). This isn’t a part of Windows. It’s relatively easy to trace this file back to VMware.

So, who calls into this kernel module? Adding the stack column after the module, we can see that too:

Eureka: It is file system access that is causing the slowdown. See the call stack? Starts from GetFileAttributesW and ends up inside vmci.sys.

Fixing the problem

Now, before you go ahead and conclude that VMware adds a horrible overhead to I/O, let’s just try to move the source files into the guest OS itself. Recall that my machine is using VMware shared folders to access the source code. It might simply be the sharing framework that is acting strange…

The result of using the guest OS’s file system is staggering. Running the build process now looks like this at the CPU level:

And the total build time is down from over 15 minutes to less than 3 minutes.

Thank you xperf…

At this point, we are reduced to guessing what is going on

One Million IOPS on a 2 socket server

Today, using Fusion ioMemory technology, I worked with our team of experts to hit 1M 4K random read IOPS on Windows. We did this on a 2-socket Sandy Bridge Server.

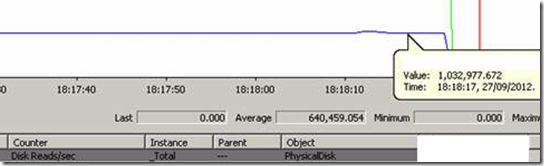

Below is the screenshot to prove it:

Think for a moment about what it will take to actually make use of all those IOPS. If this is the type of speed you have at your disposal, maybe it is time to rethink what is possible. Check out our SDK at http://developer.fusionio.com for the leading edge work Fusion-io is doing in this space.

As I am sure my regular readers can guess, I am loving my new job!

Joining FusionIo

I am very happy to announce that I have signed a contract with FusionIo and will be joining them as CTO of EMEA from 1st September 2012.

As many of you know, I have worked together with FusionIo on many occasions and really enjoyed the collaboration. I believe that their products hold the keys to a new era of computing and it is an honour to join their ranks. I will be looking forward to doing a lot of exciting research and customer implementations for them.

This brings me to the work I have been doing since I left Microsoft. Here is how it will transfer:

Consulting Contracts

I have contracts with some customers open. These are all due to terminate before 1st September and I will of course honour my agreements here. Unfortunately, my new job will not allow me to continue the collaboration with these customers on a consulting basis after this. The good news is that my courses will still be available and I will be able to share my knowledge through this channel.

Courses and Conferences

Contributing information to the community is one of my great passions in life. FusionIo has allowed me to continue to pursue this interest. My courses will still be available, although only for a very limited number of days every month, as the course time will be coming out of my vacation days (hint on how to get a discount). I expect demand to be high. There are already three tuning courses set up across Europe which will be held as planned, and a lot of people have made it clear they want more. I will soon announce the exact dates for the planned courses on this blog and let you know how to join the ones that are open to the public. The material is looking amazing and uses the new format that evolved at SQL BITS and drove the top scores there. I expect these will be my best presentations yet. I am also happy to announce that my data modelling course is well underway and will be available soon.

I will continue to submit abstracts for conferences and stay in close touch with the community, just like I have always done. And this brings me to:

Grade of the Steel

I am very excited that FusionIo has an interest in expanding the testing I have done with my Grade of the Steel Project. I will continue to run benchmarks on the latest and greatest storage and provide configuration and tuning guidance specific to non-volatile memory. It is too early to say exactly which format the publications will take; I will keep you posted on this blog.

What is the Best Sort Order for a Column Store?

As hinted at in my post about how column stores work, the compression you achieve will depend on how many repetitions (or “runs”) exist in the data for the sort order that is used when the index is built. In this post, I will provide you more background on the effect of sort order on compression and give you some heuristics you can use to save space and increase speed of column stores. This is a theory that I am still only developing, but the early results are promising.
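To make the idea of "runs" concrete, here is a minimal Python sketch. It is not how any real column store encodes data; it only counts the runs of repeated values in each column after sorting a tiny, made-up two-column table in different orders. The table contents and the helper names `count_runs` and `runs_per_column` are assumptions introduced purely for illustration.

```python
from itertools import groupby


def count_runs(values):
    """Count maximal runs of consecutive equal values."""
    return sum(1 for _ in groupby(values))


def runs_per_column(rows, sort_order):
    """Sort rows by the given column order and return the run count per column."""
    ordered = sorted(rows, key=lambda row: tuple(row[c] for c in sort_order))
    column_count = len(rows[0])
    return [count_runs(row[c] for row in ordered) for c in range(column_count)]


# Hypothetical table: column 0 has 3 distinct values, column 1 has 4.
rows = [(i % 3, i % 4) for i in range(12)]

# Run counts in the original (unsorted) row order.
unsorted_runs = [count_runs(row[c] for row in rows) for c in range(2)]
print("unsorted:        ", unsorted_runs)
print("sorted by (0, 1):", runs_per_column(rows, (0, 1)))
print("sorted by (1, 0):", runs_per_column(rows, (1, 0)))
```

Fewer runs in a column means fewer (value, length) pairs to store under run-length style encoding. With this toy data, the leading sort column collapses into a handful of long runs while the other column stays fragmented, so the total number of runs depends on which column leads the sort.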