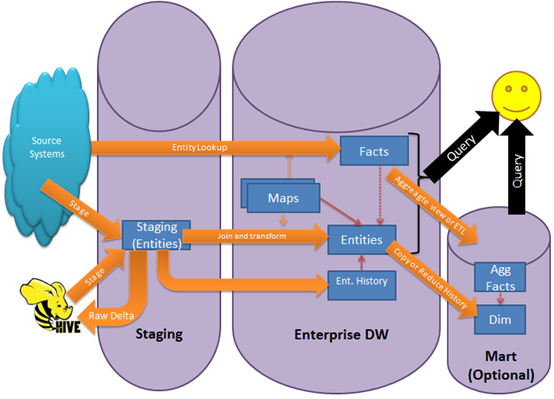

The Big Picture – EDW/DW architecture

Now that the cat is out of the bag on the Kimball forum, I figured it would be a good idea to present the full architecture that I will be arguing for. I was hoping to build up to it slowly, by establishing each premise on its own before moving on to the conclusion.

But perhaps it is better to start from the conclusion and then work my way down to the premises and show each one in turn.

Here is the architecture and data flow I will be arguing should form the basis of a healthy, performing, flexible and solid foundation for an enterprise:

Method, at a High Level

The basic method for building this is:

- Model your data as Kimball would have done it. But ONLY model Type1 history in the dimension. The dimension form the entities (but not the entity history)

- If you believe snowflakes are easier to maintain, go ahead and normalize the entities, but do so using standard OLTP methods (you are allowed to have 1-m keys)

- Use the maps as described earlier to maintain key invariance

- For the attributes you want to history track, add a entity history table and add only the attributes you want to track (if not, you get into some interesting ETL issues later)

- If users want fact tables with type2 history, either create a mart for them (using the join technique I will describe), materialize a view, or allow them directly on the EDW using joins that a slight more complicated than a traditional star schema.

- Users who only need type1 history can simply use the EDW directly

Major choices

There are some major Kimball choices that must be made to make this model work

- The grain is always the lowest possible level. This means that the facts in the EDW (though maybe not in the mart, if you use it) are copies of the source transactions. This gets rid of any complaints about aggregating the fact and losing data

- The world is dimensional, and we are letting users lose on the EDW itself

- We allow either normalized or pure star schema dimensions/entities. Our choice in this will depend on our target database engine and required performance.

- We are using maps to keep the entity keys stable. This minimized the need for reload of facts

- We are assuming that it is easy to reload entities/dimensions and change their structure (as long as the key remains stable). If you are having trouble handling a billion row customer table, ask questions on this blog as we move forward.

- Facts contain all the data of the source. If there is “garbage” in the source we will split that out into a vertically partitioned table off the fact and put it on slow (i.e. cheap) storage.

- Facts are fully de-normalized – there are no big table/big table joins here

What happened to my ODS/Vault/Staging/Archive etc?

One thing to notice is what is NOT in this picture: There is no ODS, Vault or any 3NF databases. The reason is that I am of the opinion that you don’t need one, at least not one that has a data model you care about. I previously said that I would make the point that you should not bother modeling the source, it is a waste of time. This is an argument that needs a lot more backing, more about this later. But a few observations:

I acknowledge that there are auditing requirements which may drive you towards a “zero information loss” model, and that the EDW is often tasked with solving this problem. Note that zero information loss is achievable in the model above, with a few tweaks to the Kimball model. Here is how:

One of the things we can leverage today is the new HADOOP type storage systems (like HIVE or Cassandra). These systems finally deliver on the promise of truly cheap storage – no big SAN storage racks involved: cheap hardware all the way down to the disks. They can run massive archives on SATA spindles, maintain multiple copies of the data and run ad-hoc analysis with high block size I/O (giving you reasonable speed, even on SATA) at record low cost/TB. They are not (yet) super fast at responding to ad-hoc queries at high user concurrency, typically taking many minutes or hours to respond. But responding to ad-hoc queries fast and providing the “current business view of the truth” is what the EDW is all about.

In this architecture we use the HADOOP both as storage for raw copies of the source system and as a source itself (for example for web log data). HADOOP is the mother of all stovepipes and it lets us bring back old data if required to do so, satisfying the auditors and de-railing the argument about keeping too much data around just for this purpose. In other words: it leaves us free to model the EDW to only contain data that we know is needed to answer business problems and throw the rest away. We don’t want to understand the source or model it (except for the purpose of ETL’ing from it for the exact structures we need). We just make sure we don’t lose the source, if it cannot guarantee zero loss for us.

In this architecture we use the HADOOP both as storage for raw copies of the source system and as a source itself (for example for web log data). HADOOP is the mother of all stovepipes and it lets us bring back old data if required to do so, satisfying the auditors and de-railing the argument about keeping too much data around just for this purpose. In other words: it leaves us free to model the EDW to only contain data that we know is needed to answer business problems and throw the rest away. We don’t want to understand the source or model it (except for the purpose of ETL’ing from it for the exact structures we need). We just make sure we don’t lose the source, if it cannot guarantee zero loss for us.

If we have them, analysts are also free to hack away with ad-hoc queries on raw source data in HADOOP, in the table format and model they are used to see in the source, without having to disturb the source or rely on some new vocabulary or over normalized model technique.

Moving forward (or back) from here

I am fully aware that I make some grand claims, and of course, you should not believe me until you have seen the arguments, the design considerations, the safety measures, the methods, the raw numbers and calculations.

But this is a start for the debate and a framework for fleshing out these arguments.

References:

Master Data Management?

I agree with the design Thomas. History tables should be used in a EDW for dimension key changes. The EDW, like you say, should not copy the source systems but should have the granularity of the source systems so that you can remodel the marts when needed.

The second part of what to do with transactions in the fact table that change is also a good design. In bank systems you always have the traceability requirement that never allows you to do overwrites.

Put all records that change in history tables in the EDW. Separate the dynamic part from the static.

Ayyan: Was that a question? 🙂

:), Yes, that is a question of where is the MDM in the data warehouse architecture?

Because, EDW performance can be improved using MDM. and what is your view in it?

Thomas: Agree. The static and dynamic parts of fact tables can either be split into separate partitions or even separate tables. In SQL Server, I prefer to use tables to split up data “fluidity” and partitions to split up the values in the table. I then put a view on top of them to present a single view to the user.

There are also cases where it makes sense to have the “fluid” data in a specially designed table structure (for example, you may want it in a FULL logged database and have the static data in a SIMPLE mode)

Will you be in the Gothenburg user meeting?

No, I think it is an upstart meeting for a new section of SQLUG in Gothenburg. See you in Stockholm in November. Many great design discussions here !

Ayyan: The MDM feeds the Map tables as described in my blog post about those.

I am curious to know how you can use MDM to improve EDW performance?

During ETL process, We spend lot of time in cleaning data along with managing master data. if we use MDM, it can obviously improves the data loading performance of EDW.

Ayyan: In my experience, cleaning dimension data (which is what the master data feeds to you) is about 1-2% of the total load time – the rest goes to fact loads.

I DO take you point that having a master data repository makes this dimension/entity loads faster – but I dont think it matters much in the total batch window. Just remember: if you get clean data from the MDM system – someone else spend time cleaning it for you 🙂

Hi Thomas,

Thanks for the post. It’s great to have a reference like this when substantiating claims of the redundancy of an Inmon-style 3NF EDW architectures. I fully agree with it, and I hope that more architects will read this post, think about it, experiment a little, and ultimately come up with more decent designs, which work…skipping the “enterprise-class” rubbish which I tend to hear way too often often these days, including from people working with 100k fact tables.

Thanks a lot for the comments everyone. As you can see, there is practically a book in here that needs to be written (though I think Kimball has done most of the hard work already).

I am curious to know which parts of the architecture above are most in need of some arguments and numbers? This would help me prioritize the order I write my next posts.

I’d start with:

1. Historical tables

2. Garbage data / partitioning

Many would also doubt whether a denormalised DW will be working alright (e.g. performance, storage requirements, etc.) instead of a 3NF one. I think a strong anti-3NF argument with real stories of massive failures is way overdue. I know this has been a much debated topic, but it would also be interesting to compare two implementations head to head – one where we have a 3NF DW, and another one where we don’t. Both should be similar and built by guys who know what they are doing. Then, we could check whether the performance, maintainability, scalability and extendability has benefited/suffered from either model, how long it took to build it an the overall cost.

I guess it’s a nice opportunity for a whitepaper, no idea about a whole book 🙂

Really nice article !!

When worlds talks for architecture i can’t stop myself !!

So nice presentation and Diagram !!

Just one comment which can’t be as part of Beautiful Discussion (Becoz i am nothing !! )

Comment on Architecture :- Architecture is Art of Domain applying perfect best Technology in consideration of handful Resource with limited Money and in given area of Time which helps to live life easy and happy for Developers,PM’s,Customer,Users and of course not but the least Own Architecture Satisfaction

What that I think !! 🙂

good article and good comment

Hi Thomas,

Nice article .. but:

I wonder what the problems are that you want to solve with this architecture.

On first hand it seems related to scalability and performance.

Do you think these are the big issues in datawarehousing today?

Hi Marco

Thanks for the compliment.

First of all, I do believe that both scale and performance are crucial to the success of a data warehouse. Without them, you might as well not build a DW. And, I know that many people struggle with getting it right, especially those who blindly acquire MPP solutions, believing “magic scale” will solve their problems for them.

But no, this architecture aims to to tackle more than scale and performance. A major problem today is maintainability and extensibility of a data warehouse. While 3NF Inmon style or Data Vault implementations promise to make this task easier, they just lead to even more complexity in the delivery layer, where you least want it. It is my belief, and the core of my argument, that a traditional Kimball style warehouse, with some minor adjustments, makes for the most maintainable and easily extensible data model.

With regards to many of the other challenges of warehouses, for example: relying on poorly designed and expensive “logical reference models”, data quality issues, master data management and the organizational challenge of managing such a “single version of the truth” project. I don’t believe the data model or architecture can solve those, and I am skeptical of the models that claim they do. However, by having an agile model (and I will argue that my proposed model is very agile indeed) – you can at least respond faster when business requirements inevitably change over time, and iterate towards a good solution faster as business users learn about their own data.

There is also an interesting requirement about tracking history, Which I think it is clear it is my belief is orthogonal to the data model. Later, I shall elaborate a bit on how you handle history in the ETL flow out of the archive.

I wrote a previous blog entry about good data models you might find interesting.

HI Thomas,

I agree that maintainability and extensibility are the bottlenecks. ‘Our’ inability to quickly respond to changing business requirements drives ‘our’ backlog and business wishes for self-service.

Automation of data warehouse creation and maintenance is i.m.o. the only long term answer.

(A persistent staging area in the form of a Data Vault seems the best option to tackle this – but I like to hear arguments against that claim)

I agree that automation is the answer (and it has to come from a good meta data model).

The good news is that a star schema is super easy to automate like this, once you have settled on a good naming convention. The automation in this case happens in the ETL code – not in the data model. My claim is that there is no reason to “land” in an intermediate model – or in other words “modeling the source is a waste of time”.

The basic idea: show me your desired end result and I will generate the ETL code for you.

Hi Thomas!

100% aggree on “The basic idea…” I tend to write views, aka staging or ETL views, which map and twist the source data to what the dimension/fact tables looks like. If it cannot be done within a view, I create a materialized version of somewhere in the middel between the source layout and the dimension/fact table layout.

It’s what it’s all about, work hard in the ETL, and your EDW model will be simple and clean. The problems you are not solving in the ETL, will be there to hunt you in the EDW.

I agree that a star schema can be automated easily. In fact I’ll do a session on sqlbits on oct 1: Creating a Meta Data Driven SSIS Solution using Biml.

But my point is that once you have automated your data warehouse development there is no extra effort to support this persistent staging layer with all the added benifts. (of which some are covered in your model)

I don’t agree there is no extra effunless you also automate query plan hinting, expert level tuning and re-modeling the source to 3NF. And all this just so you can transform it into a star schema again.

But for the argument, Let us say I grant that there is no extra effort in building an intermediate layer. You are still left with the question: what benefit does this extra layer grant that is not already in the star schema?

And if there is any benefit, does that measure up well against the duplication of data, the need to learn a new vocabulary, the increased load latency (you have to “land” an additional time), the additional memory spend for the joins, the extra CPU cycles, the increased skill level (and if one is to follow Dan: money for “certification” in Vault), the additional naming conventions and translation between them, the strong coupling to the source system that is implicit in Vaults, the loss of hash join plans and the poor use of column stores. These are pretty major things to compromise on, so there better be a damn good argument for building an additional data model.

I have yet to see someone describe a use for Vault/Inmon 3NF that is not based on misrepresentation of the problems a star schema already solves. I am very curious to hear your argument (since Dan seems to be too busy learning about operating systems and databases to respond in the Kimball forum 🙂 )

Sent from my C64

OK, Let’s make some assumptions:

• There is no extra effort creating the Data Vault (automated)

• Users only query the data marts

• The extra join pain you described is only applicable to the delta load from Data Vault to data marts

Then I see only one real disadvantage: Extra storage to support the additional layer.

Now let us take a look at the advantages of this extra layer. Which can be compared to the same reason we use different layers is software programming: Separation of concerns.

Where Data Vault is all about the back-room, efficiently collecting, integrating and preserving data from the source systems. Dimensional modeling is all about the front-room, publishing the organization’s data assets to effectively support decision making.

The first advantage is obvious and has to do with demands of our auditors and other data governance enforcers: Data Vault will be able to recreate the source at a given date/time.

A second reason has to do with keeping control of / reporting on data quality issues in the source systems. Including those we solved in the data mart area.

The third reason has to do with agility. The ability to respond to changes rapidly. Small changes like changing a dimension attribute from type 1 to 2 or adding additional attributes to a dimension. But also large changes like big organizational changes, mergers, new ERP implementations. By having a persistent staging area (preferably integrated on business keys) it’ll be far easier to respond to these changes and rebuild the data marts (with history). Resulting in quicker, better reporting after such a change.

The fourth reason has to do with the old data warehouse adagio: Single version of the truth. You already changed that to “Current version of the truth” . In Data Vault we use the term “System of facts” since everybody can have is own truth.

An example: Suppose there is a company with a server division that has a small cloud department. Changes are that in the future this will be changed into a cloud division with a server department. The CEO who always lives 5-10 years ahead wants to see his truth in the reports he receives.

BTW I don’t advocate to publish everybody’s truth .. but some can come in handy.

Of course (just as in programming) you can solve some of these problems with patch work in one layer. And you have to weight these advantages against the extra costs.

Greetinx

Marco

..

Should there be a dotted line from staging to mapping and from entities to mapping? I look forward to seeing you co-present in a couple weeks

Well spotted Eric and thanks.

I will be creating new drawing (that will also look at lot better, because I am switching to another drawing tool)

An interesting architecture posted over at Klout: http://corp.klout.com/blog/2011/11/big-data-bigger-brains/

Hi Thomas,

I think I agree with most of this but I think you have a lot of detail to fill in the gaps. Specifically:

“If users want fact tables with type2 history, either create a mart for them (using the join technique I will describe), materialize a view, or allow them directly on the EDW using joins that a slight more complicated than a traditional star schema.”

…is somewhat akin to Fermat scribbling that the proof is too large to fit in the margin.

Also, I’d like to know what conditions you think this may not be appropriate for e.g.

* Low latency

* Reactively managed systems – i.e. if we see a business key in transaction data we will infer its existance until the dimension is updated, no-one will be around to create mapping tables by hand and mapping corrections will be done after the data is already loaded into the fact tables.

Thanks,

James.

Hi James

Absolutely agree that there is a fair amount of “Fermat margin scribbling here” (and great expression by the way). You bring up three major points that I still need to cover in more detail.

The first one, about the complex type2 reconstruction join, requires me to first show WHY those joins are complex (and what they do to the optimizer). This will lead me to conclude the materialization in a mart is the only truly feasible solution. This is of course only the outline of a proof. But as it happens, this is my next major blog entry, and I hope not to disappoint you. I will look forward to discussing this

The second one, about inferred keys, I think I can answer shortly here (but it may be a large enough subject for another blog entry): If you already know how to create a type2 or type1 inferred member using Kimball, then doing the same for a map table inferred member is trivial. Of course, creating inferred map entries opens you to Dolly Keys and Multi Key from other maps that infer the same member, at least until a user approves the map entry you created. But that is a minor detail and one that I dont believe has a good solution.

The third, real time design, is much more subtle. I happen to have designed a fair amount of real time systems, so I have some experience to share. One of the things I think is true in that space, is that there is less room for schema flexibility here. The closer you go to real time (and I mean seconds, not minutes) the more it that you need a relatively fixed schema. I am not sure there is CAP theorem here, but perhaps there is. The real-time space is a very large area to cover, but one that I hope to attempt at a later date. Showing how this model fits real time will clearly not a be a hand waving “margin proof”.

Also, another question:

“For the attributes you want to history track, add a entity history table and add only the attributes you want to track (if not, you get into some interesting ETL issues later)”

For attributes you want to track the history of do they exist only in the history table or is there a “current version” in the main entity table? If the latter when do you write a history row – as soon as possible or only when you need to store an old value?

I prefer to always keep the “current” version in the entity table. Typically, the storage and CPU costs of keeping these tables around is trivial. If you can make it easy to query data with a trivial investment, by all means do it.

Hi Thomas, great post (as all are, usually) but would be really important to continue the explanation on datamart build (especially when you have facts which are incrementally loaded and are related to a dim with SCD2 and you face an SCD2 capture change – with the addition of a new historical atribute) – in the diagram there is only an arrow between Facts and Entities (for the current version) and a confusing arrow which says “Copy and Reduce History” which only comes from Entities). More detail would be great!

Kind Regards

Hi Fernando

You are tight, more detail is needed and there are a series of posts I will eventually do that will dig into it. I a. Currently working on some slides that show the idea in full, and once they are ready, I will turn them into blog posts too.

The super short answer is that you store the fact keyed to the entities table in the “EDW” – because you do this, they are stable with regards to history (entity tables don’t change, even when history does). History tables (which you may have more than one of per entity) can be added and removed as your requirements change (they are keyed to the entity, but nothing is keyed to them).

When you load the mart, you do a “controlled between join” that turns the entity key into the history key (which is what Kimball just calls the “surrogate key”). This last join is what turn the business decision of history tracking into a physical model. Because we have stored data in a compressed format (linked to the entity, small key) we can perform this join as cheap as possible.

Hi Thomas,

I can’t wait for your discussion on the history join – I’m just laying down the ETL patterns for our new EDW at BNPP this week so it couldn’t come soon enough 🙂

I’m particularly interesting in how you are planning on populating a Type II Dimension and associated Facts in SSAS with this join. I can’t seem to get past the Fact having the wrong key (the entity key instead of the correct history key) – even with partitions there seems to be a very large join somewhere.

James.