Good keys, what are they like?

A central value add of data warehouses is their ability to restore the sanity that comes from using good keys. Taking a model-agnostic view of keys, they refer to “something” that is uniquely identifiable. Depending on what you are modeling, those “somethings” have different names, for example: entities, rows, tuples, cells, members, documents, attributes, object instances, and relations between the any of the latter. Because this article is about relational databases and because I don’t want to move up to the “logical” level with you (as I promised before), I will use the term: “row” as the “something” that a key refers to, and the term “column” as the data structure that implements the key.

Moving on, the definition of a “good key” is a column that has the following properties:

- It forced to be unique

- It is small

- It is an integer

- Once assigned to a row, it never changes

- Even if deleted, it will never be re-used to refer to a new row

- It is a single column

- It is stupid

- It is not intended as being remembered by users

Unique

It follows from the definition of a key that it is unique. But documentation that this is so, does not make it a key: An automated mechanism must be put in place to enforces uniqueness. This could be a constraint or a unique index.

Small

A key should be small, a few bytes at most. This makes it efficient to retrieve rows and to join on the key.

Integer

A key should be an integer, always. I have previously blogged about why this is a good idea.

Never change

You should never update key columns or change the column that is the key. First of all, it creates a mess of anything that depends on the key (which may or may not be enforced with referential integrity). Secondly, updates of key columns can have bad performance implications since the database has to do a lot of book keeping to keep the table consistent

Never re-use

Keys should never the re-used – even if the row they identify has been deleted. This turns out to be a crucial requirement in data warehouses, because we may not always be able to track all the dependencies between tables in a automated manner. This means that the warehouse or user reports may be in a state of partial inconsistency, and this is ok and normal. However, if rows exist that refer to an old version of the key, those rows may magically reappear in user reports and end up pointing to the wrong values.

It follows that key columns should be small (as in 2), but still large enough to honour this requirement.

Single column

It is bad practice to use multi-column keys. First, it is difficult to express foreign key constraints on them. Second, it makes the SQL required for accessing the key (and joining on it) more complicated and error prone to users (and programmers). Third, multi column keys makes it harder to write automated code that accesses the model (including code that auto loads the model). Fourth, it is more difficult to express histograms on multi-column keys, which makes the query optimizer more prone to generating bad plans.

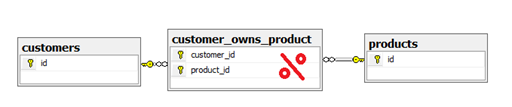

I even apply this principle to tables that implement relationships between other tables. This is best illustrated with an example. I recommend against this design:

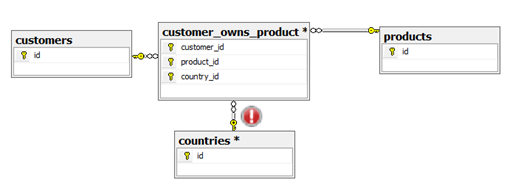

In the above example, the relationship table implements a unique relation between two tables, and the key is used to enforce the uniqueness of the relation (“a customer can only own a product once”). But this is not flexible! What if I open stores in multiple countries? Apple iTunes is a good example of such a case. Now, the customer may own the product in multiple countries like this:

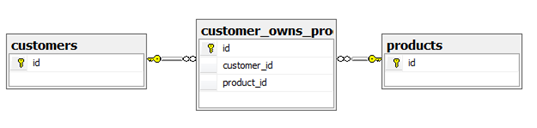

This wreaks havoc with any code that refers to the relationship itself (since the key has now changed, violating 4) above. Instead, I recommend this design that does not suffer from the problem:

If you wish to enforce the uniqueness of the relationship (a business rule, not a key) then you can declare a unique index on customer_id, product_id.

Stupid

A key should not contain information about the row it refers to. This would be a violation of the rules of database normalization. While you may not agree with the rules of normalization (and there are good reasons to disagree), consider this: If the key contains information about the row it refers to – “smart” programmers may get the idea that code could be written that would use the value of the key to implement business logic. But what happens if someone changes the row values and the key no longer reflects the other columns that is a correlated within the row? Then the code is wrong, and you will have programmers begging/ordering you to change the key (violating the principle of “never change”). Any dependency on specific key values in business logic is generally bad news for your ability to implement changes. Keep the keys to yourself and don’t tempt programmers to get smart about them.

Teflon

You generally don’t want users remembering specific primary keys in the warehouse. This is the teflon principle: “nothing sticks to it”. As we shall see later in this series, we reserve the right to make changes to the way keys are linked up to fact tables if we change our mind about history tracking. You don’t want reports to refer to your keys by their value when this happens. Imagine a data warehouse that has 50.000 reports referring to the fact tables (this is not unusual) – if any of those reports refer to a key in a fact table, and you make changes to history tracking, you will have users screaming.

It is worth pointing out that a table may have secondary keys which users refer to in queries. This is perfectly fine, you may even declare the secondary key as unique too. Ideally, users should be using the primary key on the table only for joins, and fetch unique rows by some known, secondary key. For example, locating an invoice by the source system invoice ID may use the source system key directly, but you will maintain a different primary key.

The one exception to the teflon principle, is keys for date and time. It is a reasonable assumption that “time does not change” and you may get away with generating these keys in the integer format YYYYMMDD and HHMMSS. Incidentally, this makes queries on date and time easier to write and the data easier to partition.

Related Reading:

Category: DW and Big Data

Thomas,

Can’t see a single thing in there that I would disagree with, apart from I tend to use the word “meaningless” rather than “stupid” 🙂

On the subject of integer keys I would be interested in your thoughts on some of the questions I pose in this blog post: http://sqlblog.com/blogs/jamie_thomson/archive/2009/10/14/considering-surrogate-keys-for-unknown-members.aspx

Specifically, what do you start the numbering at for your keys and what do you think of using -1 for the Unknown member?

cheers

Jamie Thomson

Hi Jamie

Good to hear from you – hope you are well. I used to practise the “-1 is unknown” principle too. But I have abandoned it. Not just for aesthetic principles, but for some (I think) good reasons.

The first realization is that there is often more than one “unknown” in a dimension – for example, you may have “blank”, “corrupt”, “invalid code format” etc… If you end up in that situation and you make the keys “smart”, you are faced with building a very complex logic into the keys and this is just bad for maintenance.

Then there is the issue of using TINYINT data types (for very small dimensions). Those don’t even have negative values. One could argue that this is a niche case.

I also dont agree with your idea of using -1 as the default member. I am all for forcing NOT NULL columns in the dimension keys – I recommend it as a design principle because it makes table scans faster and joins more efficient. But, if you want to have unknown member keys as the default, that is a job for the ETL flow. It is business logic, and therefore belongs in a place where you can track metadata. I think one of the reasons that I see the -1 is unknown a lot in Microsoft implementation is that frankly, there there are only rather clumsy ways to convert NULL to unknown in the data flow tasks. There really should be a standard component to implement this very common ETL task.

A final reason, and a design principle that I am applying a lot more these days, is the -1 values dont allow me to have “globally unique dimension keys”. If you follow this design principle, all dimension keys are INT or BIGINT, not matter the size of the dimension. Each dimension table still generates it’s own keys, but with an offset that is guaranteed not to overlap with keys in other dimensions. This has the advantage that if you get your join columns wrong, you will always return an empty result instead of a wrong result. This is good for usability. Using large interger columns like this off course wastes some space – but that it not such a big deal if you have bitpacking or column store abilities in the database.

Instead of having the “negative is unknown” convention, I will instead mark the unknown member (or members) with a an additional bit column in the dimension: IsUnknown = 1. This also opens a design pattern where every late arriving key can be marked as “unknown” (allowing a LOT of unknown values), and when/if they key arrives, it then become “known”, without updating the fact table.

Hey Thomas,

Good to know I’m not the only who detests the implied “-1 means unknown” – just a shame that this has (seemingly) become the convention in the SQL Server space.

“Each dimension table still generates it’s own keys, but with an offset that is guaranteed not to overlap with keys in other dimensions. This has the advantage that if you get your join columns wrong, you will always return an empty result instead of a wrong result. ”

This is interesting – I had never thought of doing this. I guess as an extra precaution there is no reason not to do it – I might add that to my “toolkit”.

I have seen implementations where, as you say, there is more than one unknown member – “Unspecified” is one that seems to pop up a lot. Where I have seen this I have often also seen the convention being extended to “-2 means unspecified”. Worse still IMO! (Of course – it does raise the question as to whether these facts are actually being stored in the wrong fact table – but let’s leave that one for another day).

“Instead of having the “negative is unknown” convention, I will instead mark the unknown member (or members) with a an additional bit column in the dimension: IsUnknown = 1”

Now this is something I generally don’t do for the simple reason that there is no way of constraining it (i.e. what’s to stop an undisciplined developer setting multiple rows with [IsUnknown]=1?) and that bothers me a little. On the other hand I do like the idea of using it for late-arriving members.

I have toyed with the idea of having a single-row table in my warehouse that has a column per dimension table holding the ID of the Unknown member of the respective table – I haven’t ever implemented it though.

Lots of food for thought, thanks Thomas. Loving the series so far – please keep it up!

JT

I am continually browsing online for articles that can facilitate me. Appreciate the amazing post. I am glad you took the time to post it. Thanks

I have been surfing online more than 3 hours today, yet I never found any interesting article like yours. It’s pretty worth enough for me. In my view, if all website owners and bloggers made good content as you did, the net will be much more useful than ever before.

I completely agree with everything written, Thomas, as you may already know. The only exception is in what you and Jamie (Hello!) said in the comment regarding the negative values in dimensions to identify unknown members.

I actually use and like the idea of having negative values to represent unknown members. This approach allows for quickly identification unknown dimension members in fact table without having to join it with dimension each dimension, making debugging of data checking very easy and fast.

I always put at least two values to quickly identify missing information. One to identify the fact the that information is known to be missing (for example you also have a NULL value in the OLTP source and you), the other to identify that a connection between the fact and the dimension could not be made due to the fact that the ETL phase is not able to find a match (erroneous data, incoherent time intervals and so on). There is no need for complex logic in order to do so; surely not more complex if the number would be a positive one.

By defining some “standard” unknown values and forcing it to be negative, I can also be sure that there is a common way to handle such situation in all the BI team working on the project.

Of course when you have to deal with late-arriving facts it’s a completely different story, but this is something that falls in the “exceptional situation” for me, which has to be handled differently from the “general” approach.

Btw, very good and interesting post/blog. It’s nice to have a place where to discuss such arguments 🙂

Hi Davide

Your ability to quickly identify unknowns is exactly what Jamie and I are against. This means that you now get hardcoded references to surrogate keys into the reports and ETL flows. I simply don’t think there is such as thing as a “general unknown member”. You identify two potential candidates, but there could be more (for example: you may distinguish between “data out of range” and “data not recognized as valid key”). And what if some dimension don’t have all types of unknowns, would you still allow all the different “types of unknown keys” in there? That could potentially lead to joins not being valid.

Therefore is I prefer to have a bit column that indicates that the member is unknown instead of putting intelligence in the key. If you want “quick debugging” to find unknown keys, it is a small matter to write a view that is pre-joined, exposing all unknown values.

Hiya Davide,

Interesting to see a comment wholly in favour of -ve IDs for representing UNKNOWN. I’m not surprised of course – clearly there needs to be a way of signifying UNKNOWN and there are definitely benefits for using -ve numbers.

Great to see this being discussed openly here. Long may it continue!

JT

Let the battle begin indeed.

A question then: If you let 0 (or -1) signfify the unknown member – do you then put that key in the dimension table?

Absolutely yes, always add the unknown/none member into the dimension. Otherwise there’s no consistency in their attributes from report to report. It also maintains referential integrity. Don’t want a report writer to miss facts simply by using an inner join!

It also means you don’t have to hard code 0/-1 into report logic, getting around one of your reasons for not using them.

Thomas,

I think that Identity column can match the 8 properties for a “good key”, what do you think?

Do you have concerns about the Identity flexiblity during ETL, manual changes, insert orders or others?

Latest question, probably too specific to datawarehouse scenario, any best-practice regarding “Good Key” if you are design a Dimension Table or Fact Table?

Hi Andrea

Yes, I do indeed think that identity columns meet these criteria. But as you will see later (watch for my “grade of the steel” blogs), you may not be able to generate them fast enough.

With regards to identity flexibility, could you provide an example of what you mean? If the key is “good” then the order you insert rows in should not matter.

I am not sure I follow you question on dimension/fact table. Could you elaborate please?

Hi Thomas,

thanks to confirm that Identity column can be good enough solution.

Regarding dimension/fact table I’d like your opinion about the order that rows are read by SSAS during processing.

We know that “row order” is important to have best compression/small fact datafile. Is the “good key” enough to obtain a “good compression” or we have to “play” with index to force different order?

Andrea, these are very different problems.

The “good key”, Assuming it is an integer, will naturally make the fact table smaller as it makes the table narrow too. But the sort order is different beast and that discussion is a bit out of scope for this particular blog article.

However, you may want to have a log at Alberto Ferraris blog in the link section, he is currently investigating it

Hi Thomas

Some very interesting points here, thanks for the blog (and for the interesting comments Jamie, Davide & Andrea)!

I’ve never found a compelling enough reason to not use -1 as the unknown member, aside from personal preference, however your point about late arriving keys is absolutely spot on. I’ll definately consider using the IsUnknown flag in my next design.

Having said that, I think there is still a valid reason for using predetermined keys when dealing with the ‘None’ case. If a fact record simply does not and should not have a link to a dimension member then I link it to the 0 member. For example an insurance policy may not have been sold through a broker, it therefore shouldn’t link to a business record in the Broker dimension. I create a ‘0’ member for this purpose.

Unknown and None are very different cases with different business meaning (although they are combined into the -1 key all too often in my experience). You’re right in that you can have many different ‘unknown’ members, but surely there can only ever be one ‘none’. Similar to the case of using YYYYMMDD with dates, the meaning of ‘none’ can’t ever change, so is there a problem using a hard coded key?

Hi Alex

Thanks, I am really happy to see the discussion going on now. With regards to the “none” member – which one of them do you mean? The NULL, the blank string, the string with only spaces, the CHAR(0) ? 🙂

Very good point. They are different cases from a data perspective, but from a business perspective they are identical, so should they not be conformed in the warehouse into a single member with a single meaning?

Alex: ARE they the same from business perspective? What if you want to join the fact back to the source to check that you load the right result? Why force the choice?

I know it is far fetched, but consider the alternative, generic solution: Just construct a view on top of the fact table and use that to find the unknown.

I miss the good argument FOR the -1 is unknown – whereas the flexibility of the IsUnknown = 1 is free. Not sure I buy that “I will have to write one less join” as a good enough reason.

If conceptually and logically they all mean ‘None’ then yes, I’d say they should be treated as identical and the warehouse should conform them together. To reconcile the data you’d just compare all ‘none’ data together, not differentiate between the different sources of ‘none’.

If there is a valid reporting need to differentiate between the ‘none’s then they cease to become logically equivalent, and should be kept seperate, but that’s defined from the reporting requirements, not defined from the technical approach.

As for the argument FOR the -1, I would say it’s normally used because of the absence of an argument against it. Something this blog entry may change…

[This means that you now get hardcoded references to surrogate keys into the reports and ETL flows.]

No, no I would’t do such thing 🙂 Even if I have a negative number that indicates me that a fact row has an unknown value for a dimension, I’ll always go for the JOIN/LOOKUP in reports (if I’m building reports directly on the DWH and not on a SSAS Cube)

For ETL flow I can just use the knowledge that if in a fact row I have a negative dimension id, I know that that row is not related to that dimension, for any kind of reason.

The advantage of this approach is that no join as to be made to the dimension in order to know if a fact row is related to an unknown dimension element or not.

Of course if I need to know details about this “missing relationship” I have to join fact with dimension in order to correctly decode the dimension id value.

[I simply don’t think there is such as thing as a “general unknown member”. You identify two potential candidates,]

Sure I agree with this. That’s why all dimensions should have an “unknown member”. (With Id = -1 in my case)

[“types of unknown keys” in there? That could potentially lead to joins not being valid.]

Agree again, and again that’s why each dimension, beside the “standard” -1 Unknown member, should have specific unknown members. For example for the Time dimension we use -2 for Wrong Date, -3 for Incoherent date and so on.

>That could potentially lead to joins not being valid.

Mmm…I don’t see how I can have invalid joins in that way. Even with negative dimension ids all fact rows will have an exact match to the dimension they use, so nothing will be missed.

Davide, I dont see how you will avoid hardcoded references. While you may not hardcode it, other report developers will – and then you are stuck with the key.

T>[I simply don’t think there is such as thing as a “general unknown member”. You identify two potential candidates,

D>Sure I agree with this. That’s why all dimensions should have an “unknown member”. (With Id = -1 in my case)

Doesn’t that contradict with you agreeing with me? 🙂 If there is no general unknown, why are you then creating one?

D>Mmm…I don’t see how I can have invalid joins in that way. Even with negative dimension ids all fact rows will have an exact match to the dimension they use, so nothing will be missed.

Yes, but don’t you then assume you have the SAME unknown members everywhere? Say you allow -2 in dimension A, but not in dimension B. What is to prevent some “smart” developers from hardcoding -2 in dimension B by accident as part of the ETL flow? I generally don’t like a convention that may lead people to make assumptions that are invalid.

I won’t have any key floating around report since facts will be joined with dimension and the filter will be on dimension one or more dimension attribute which is not the key, of course. It may be the element name or – as you also suggest – an attribute that tells if that element specifies a known or unknown condition. (In the latter case we don’t use bit but a tinyint that allow the categoriziation of several “exceptions” to the data. Beside unknown values there also known “bad” values 🙂 that must be identified and reported to the customer in order to help to raise data quality 🙂 )

My point is that having negative dimension ids does not imply that you’re going to hard-code value everywhere. They’re just an additional “tool” that can help and if used correctly, like any tool, doesn’t harm, but – instead – bring some, even litte, added value.

T> If there is no general unknown, why are you then creating one?

I thought that for “general unknown” you mean to have the assumption so that “-1” is always the unknown member, so there is no need to have it created in each dimension. That would surely make the joins harder.

That’s why I said that I agree that this approach is not the one I would follow 🙂

But having all dimension with their own unknown member is fine for me. The fact that the id of that member is positive or negative is irrelevant. For this I can choose to have a negative one, since this can become relevant during and only during debugging, to make it quicker.

T> What is to prevent some “smart” developers

Ah I see your point 🙂 But that’s another question, and, as you know, I think that rules and their application is what save us from this problem.

Btw, there should be a way to simulate also virtual (good) wine, since this interesting discussions are much better with a glass of good wine in the hand 🙂 🙂 🙂